Stable by Design: How Task-Based Pools and Predictable Rotation Reduce Platform Flags

You buy more proxies, wire up “smart rotation”, and spread traffic across a big shared pool.

On paper you’re safer: more IPs, wider regions, fewer hits per exit.

In reality:

- New accounts still get flagged in batches.

- Old accounts suddenly see a wave of captchas.

- When one task misbehaves, whole regions start feeling unstable.

The core issue usually isn’t “too few IPs”. It’s that all tasks share the same exits and rotate chaotically, so every account ends up caught in the same noisy traffic patterns.

Here’s the direction that works far better in practice:

Build task-based pools and rotate predictably inside each pool, instead of letting every task grab any exit at random.

This article solves one concrete problem:

How to redesign your proxy usage so different tasks stop polluting each other and overall flags go down, without having to triple your IP spend.

1. The real problem: shared chaos, not dirty exits

Most teams start in a similar way:

- One big proxy list for everything.

- A “smart” rotator that picks a random IP per request or per session.

- All account actions, scraping, analytics, and internal tools sit on that same pool.

Typical symptoms:

- When you push scraping harder, logins on unrelated accounts also begin seeing more challenges.

- One misconfigured script fires too fast and gets a chunk of exits rate-limited, which then hurts other workflows that reuse those exits.

- Even with residential or decent datacenter IPs, the platform sees the same pattern again and again: many accounts hitting similar endpoints from overlapping IP ranges with the same noisy timing.

In short: you’re mixing different risk profiles on the same exits, and your rotation logic is making the mixture look even louder.

2. The idea: exits follow tasks, not “whoever asks first”

To reduce flags, exits need a clear job. Not “serve everything”, but “serve this kind of traffic”.

Two design shifts help immediately:

- Task-based pools

– Each pool is dedicated to a distinct job type: login, posting, scraping, reporting, internal tools, etc.

– High-risk actions and low-risk actions do not run through the same exits.

– If one task misbehaves, it hurts its own pool, not your entire operation.

- Predictable rotation inside each pool

– Rotation is limited to a small, known subset of exits per task.

– Rotation happens on simple, explainable rules: for example, per session or per fixed time window.

– You avoid “every request uses a completely different IP” noise that screams automation.

You stop thinking “how do I spread traffic across all IPs?” and start thinking:

“For this specific task, which exits should I use, and how can I keep their behavior boring and predictable?”

3. Designing task-based pools: three practical axes

When you define pools, focus on three dimensions:

3.1. By risk level

- High-risk: logins, password changes, payment edits, appeals, account recovery.

– Use the cleanest and most stable residential exits.

– Very limited rotation, often 1–2 exits per account.

- Medium-risk: posting content, editing profiles, moderate interaction.

– Use stable residential or good datacenter exits.

– Predictable rotation per session or per time window.

- Low-risk: scraping public data, pulling reports, search, internal dashboards.

– Use datacenter exits with higher volume.

– Rotation can be more active, but still structured.
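One way to make these tiers explicit is a small lookup that goes from action name to risk tier to pool policy. The sketch below is illustrative only. The action names, the `PoolPolicy` fields and the `rotate_on` labels are all hypothetical. The cap of 2 exits for high-risk actions mirrors the “1–2 exits per account” guideline above.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Risk(Enum):
    HIGH = "high"
    MEDIUM = "medium"
    LOW = "low"


@dataclass(frozen=True)
class PoolPolicy:
    exit_type: str                        # preferred exit class for this tier
    max_exits_per_account: Optional[int]  # None = no fixed per-account cap
    rotate_on: str                        # boundary at which rotation may happen


# Hypothetical action names: replace with your own task identifiers.
ACTION_RISK = {
    "login": Risk.HIGH,
    "password_change": Risk.HIGH,
    "payment_edit": Risk.HIGH,
    "account_recovery": Risk.HIGH,
    "post": Risk.MEDIUM,
    "profile_edit": Risk.MEDIUM,
    "scrape_public": Risk.LOW,
    "pull_report": Risk.LOW,
}

# Illustrative policies; the rotate_on labels are assumptions, not a standard.
POLICIES = {
    Risk.HIGH: PoolPolicy("residential", 2, "failover_only"),
    Risk.MEDIUM: PoolPolicy("residential_or_datacenter", None, "session_or_window"),
    Risk.LOW: PoolPolicy("datacenter", None, "batch"),
}


def policy_for(action: str) -> PoolPolicy:
    """Return the pool policy for an action; unknown actions fail loudly."""
    try:
        return POLICIES[ACTION_RISK[action]]
    except KeyError:
        # Never let an unclassified action drift into whatever pool is free.
        raise ValueError(f"unclassified action: {action!r}") from None
```

Failing on unknown actions is deliberate. A new script has to be classified before it can touch any exits.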

3.2. By geography

- One pool per target region: US_TASKS, EU_TASKS, SEA_TASKS, etc.

- Logins and key actions for a region stick to that region’s pools.

- You don’t let a “US login” occasionally come from an EU scrape exit just because it’s free.

3.3. By product or platform

When possible, avoid using the exact same exits for completely different platforms.

Flags on one site can sometimes bleed into reputation signals elsewhere.

A simple rule that already helps:

One task type × one region × one platform = one dedicated pool.
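Here is a minimal sketch of that rule, assuming pools are plain in-memory lists of exit identifiers. The pool key is the tuple (task, region, platform). A lookup with no matching pool raises an error instead of falling back to another region. `PoolKey`, `PoolRegistry`, the platform name and the exit IDs are hypothetical.

```python
from typing import Dict, List, NamedTuple


class PoolKey(NamedTuple):
    task: str      # e.g. "login", "post", "scrape"
    region: str    # e.g. "US", "EU", "SEA"
    platform: str  # target platform (hypothetical names below)


class PoolRegistry:
    """One task type x one region x one platform = one dedicated pool."""

    def __init__(self) -> None:
        self._pools: Dict[PoolKey, List[str]] = {}

    def register(self, key: PoolKey, exits: List[str]) -> None:
        if key in self._pools:
            raise ValueError(f"pool already defined for {key}")
        self._pools[key] = list(exits)

    def exits_for(self, task: str, region: str, platform: str) -> List[str]:
        key = PoolKey(task, region, platform)
        if key not in self._pools:
            # Deliberately no fallback: a US login never borrows an EU scrape exit.
            raise LookupError(f"no dedicated pool for {key}")
        return list(self._pools[key])


# Usage with hypothetical exit IDs and platform name.
registry = PoolRegistry()
registry.register(PoolKey("login", "US", "platform_a"), ["US_RES_LOGIN_01", "US_RES_LOGIN_02"])
registry.register(PoolKey("scrape", "EU", "platform_a"), ["EU_DC_SCRAPE_01"])
print(registry.exits_for("login", "US", "platform_a"))  # ['US_RES_LOGIN_01', 'US_RES_LOGIN_02']
```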

4. Predictable rotation: “boring” beats “random”

Random IP per request sounds safer but often looks worse to risk systems.

What you want instead is rotation that:

- Stays within a known small set of exits for that task.

- Changes at clear boundaries: new session, new batch, or fixed time window.

- Keeps each account or worker on the same exit long enough to look like a real user path.

Examples of predictable patterns:

- “Each login session for an account uses the same exit from start to finish.”

- “Scraping batch N uses exit A, batch N+1 uses exit B, then repeats.”

- “Every thirty minutes, reporting workers rotate to the next exit in the pool, not on every single request.”
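The three patterns above reduce to simple, deterministic index arithmetic. This sketch assumes each pool is an ordered list of exit IDs. The function names, the 30-minute default window and the hash-based session pinning are illustrative choices, not a prescribed method.

```python
import hashlib
from typing import Sequence


def exit_for_window(exits: Sequence[str], now_s: float,
                    window_s: int = 30 * 60, offset: int = 0) -> str:
    """Move to the next exit once per fixed time window, never per request."""
    window_index = int(now_s // window_s)
    return exits[(window_index + offset) % len(exits)]


def exit_for_batch(exits: Sequence[str], batch_number: int) -> str:
    """Batch N uses exit N mod len(exits): A, B, ..., then repeats."""
    return exits[batch_number % len(exits)]


def exit_for_session(exits: Sequence[str], account_id: str, session_id: str) -> str:
    """Pin a whole session to one exit: the pick depends only on (account, session)."""
    digest = hashlib.sha256(f"{account_id}:{session_id}".encode()).digest()
    return exits[int.from_bytes(digest[:8], "big") % len(exits)]
```

Because every function is deterministic, you can recompute afterwards which exit a given window, batch or session used. That makes debugging a flag much easier than with per-request randomness.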

Inside the pool, keep rotation structured but not rigid:

- Use round-robin or weighted round-robin.

- Add a little randomness to which exit starts first, not to every single pick.

- Track per-exit error rates and take unhealthy exits out of rotation instead of pushing through.
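A sketch of these three rules using plain round-robin (not the weighted variant). It assumes you can report a success or failure for each request. Randomness only chooses the starting position. Exits whose error rate over a recent window exceeds a threshold are skipped. The class name, the 20-result window and the 0.3 threshold are assumptions for illustration.

```python
import random
from collections import deque
from typing import Deque, Dict, List, Optional


class HealthAwareRoundRobin:
    """Round-robin over one pool: random start, then fixed order, unhealthy exits skipped."""

    def __init__(self, exits: List[str], window: int = 20, max_error_rate: float = 0.3,
                 rng: Optional[random.Random] = None) -> None:
        if not exits:
            raise ValueError("pool must contain at least one exit")
        self.exits = list(exits)
        rng = rng or random.Random()
        self._pos = rng.randrange(len(self.exits))  # the only random choice
        self._max_error_rate = max_error_rate
        # Last `window` outcomes per exit (True = success).
        self._recent: Dict[str, Deque[bool]] = {e: deque(maxlen=window) for e in self.exits}

    def record(self, exit_id: str, ok: bool) -> None:
        self._recent[exit_id].append(ok)

    def error_rate(self, exit_id: str) -> float:
        results = self._recent[exit_id]
        return results.count(False) / len(results) if results else 0.0

    def healthy(self, exit_id: str) -> bool:
        return self.error_rate(exit_id) <= self._max_error_rate

    def next_exit(self) -> str:
        # Walk the ring at most once, returning the first healthy exit.
        for _ in range(len(self.exits)):
            candidate = self.exits[self._pos]
            self._pos = (self._pos + 1) % len(self.exits)
            if self.healthy(candidate):
                return candidate
        raise RuntimeError("no healthy exits left in this pool")
```

In this sketch an unhealthy exit stays out of rotation, because it receives no new traffic to improve its record. Re-admitting it, for example after a cooldown or a manual check, is left to your own policy.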

5. A newbie-friendly layout you can copy

Let’s say you have:

- 40 accounts on one major platform.

- Three main tasks:

– Logins and security-sensitive actions.

– Content posting and light interaction.

– Scraping public listings and stats.

You have access to:

- 20 high-quality residential IPs.

- 40 decent datacenter IPs.

Here’s a simple structure that already works better than “one big pool”:

5.1. Pools

- POOL_LOGIN_HIGH

– 10 residential IPs.

– Only used for logins, password changes, payment updates, security checks.

– Each account is assigned 1 primary + 1 backup IP from this pool.

- POOL_POST_MEDIUM

– 10 residential IPs.

– Used for posting, profile edits, light interaction.

– Accounts from the same region share a small subset, but you avoid rapid cross-account rotation.

- POOL_SCRAPE_LOW

– 40 datacenter IPs.

– Used for crawling lists, prices, stats, and pulling heavy reports.

– Rotation per batch, not per request.
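The pool layout can live in code as plain data, together with a check that enforces isolation: no exit may appear in two pools. This is a sketch. `make_pool` and its naming scheme are hypothetical, and real exit IDs would come from your provider. The counts match the budget above (10 + 10 residential, 40 datacenter).

```python
from collections import Counter
from typing import Dict, List


def make_pool(prefix: str, count: int) -> List[str]:
    # Hypothetical naming scheme standing in for real provider exit IDs.
    return [f"{prefix}_{i:02d}" for i in range(1, count + 1)]


POOLS: Dict[str, List[str]] = {
    "POOL_LOGIN_HIGH": make_pool("RES_LOGIN", 10),   # residential
    "POOL_POST_MEDIUM": make_pool("RES_POST", 10),   # residential
    "POOL_SCRAPE_LOW": make_pool("DC_SCRAPE", 40),   # datacenter
}


def shared_exits(pools: Dict[str, List[str]]) -> List[str]:
    """Exits that appear in more than one pool, i.e. isolation leaks."""
    counts = Counter(e for exits in pools.values() for e in set(exits))
    return sorted(e for e, n in counts.items() if n > 1)


# Fail fast at startup if someone copies an exit into a second pool.
assert not shared_exits(POOLS), "pools must not share exits"
```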

5.2. Mapping accounts

For 40 accounts, you might decide:

- Group them by region first (for example, 25 US, 15 EU).

- For each region:

– Divide accounts into groups of 5.

– Assign each group 1–2 login exits in POOL_LOGIN_HIGH and 1–2 posting exits in POOL_POST_MEDIUM.

Example for one group of 5 US accounts:

- Login:

– Primary: US_RES_LOGIN_01

– Backup: US_RES_LOGIN_02

- Posting:

– Primary: US_RES_POST_11

– Backup: US_RES_POST_12
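The arithmetic is worth checking. Splitting 25 US and 15 EU accounts into groups of 5 gives 8 groups. Each login pool holds 10 exits, so not every group can have its own dedicated backup. This sketch gives each group a unique primary and shares the remaining exits as backups within the same region. The 6 US / 4 EU split of the 10 login exits, the account IDs and the function names are assumptions.

```python
from typing import Dict, List, Optional, Tuple

Assignment = Dict[str, Optional[str]]


def chunk(items: List[str], size: int) -> List[List[str]]:
    """Split accounts into consecutive groups of `size` (last group may be smaller)."""
    return [items[i:i + size] for i in range(0, len(items), size)]


def assign_group_exits(n_groups: int, exits: List[str]) -> List[Tuple[str, Optional[str]]]:
    """Give each group a dedicated primary; share any spare exits as backups."""
    if len(exits) < n_groups:
        raise ValueError("need at least one dedicated primary exit per group")
    spares = exits[n_groups:]
    return [
        (exits[i], spares[i % len(spares)] if spares else None)  # None = primary only
        for i in range(n_groups)
    ]


def build_account_map(
    accounts_by_region: Dict[str, List[str]],
    exits_by_region: Dict[str, List[str]],
    group_size: int = 5,
) -> Dict[str, Assignment]:
    account_map: Dict[str, Assignment] = {}
    for region, accounts in accounts_by_region.items():
        groups = chunk(accounts, group_size)
        # Exits never cross regions: each region draws only from its own list.
        plan = assign_group_exits(len(groups), exits_by_region[region])
        for group, (primary, backup) in zip(groups, plan):
            for account in group:
                account_map[account] = {"primary": primary, "backup": backup}
    return account_map


# Hypothetical IDs: 25 US + 15 EU accounts, 6 US / 4 EU split of the 10 login exits.
accounts = {
    "US": [f"us_acct_{i:02d}" for i in range(1, 26)],
    "EU": [f"eu_acct_{i:02d}" for i in range(1, 16)],
}
login_exits = {
    "US": [f"US_RES_LOGIN_{i:02d}" for i in range(1, 7)],
    "EU": [f"EU_RES_LOGIN_{i:02d}" for i in range(1, 5)],
}
login_map = build_account_map(accounts, login_exits)
print(login_map["us_acct_01"])  # {'primary': 'US_RES_LOGIN_01', 'backup': 'US_RES_LOGIN_06'}
```

The same function can map posting exits from POOL_POST_MEDIUM. Just pass that pool's per-region lists.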

5.3. Rotation rules

- Logins:

– Each account uses its primary login exit consistently.

– Only switches to backup after clear, repeated network failures, not just one error.

- Posting:

– Each posting session sticks with the assigned posting exit.

– For a new session, you may occasionally flip between the two posting exits, but not mid-session.

- Scraping:

– Break scrape tasks into batches (for example, 500 URLs).

– Assign each batch to one datacenter exit from POOL_SCRAPE_LOW in round-robin.

– If an exit starts throwing errors or getting throttled, pause it and move to the next.
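A sketch of two of these rules follows. First, a login selector that fails over only after several consecutive network failures. Second, a planner that cuts scrape URLs into batches of 500 and assigns them round-robin over the exits that are not paused. The threshold of 3 failures, the class and function names, and the `paused` set (maintained elsewhere, for example by a health tracker) are assumptions. Switching back from backup to primary is not handled here.

```python
from typing import List, Set, Tuple


class LoginExitSelector:
    """Stay on the primary exit; fail over only after N consecutive network failures."""

    def __init__(self, primary: str, backup: str, failures_to_switch: int = 3) -> None:
        self.primary, self.backup = primary, backup
        self.failures_to_switch = failures_to_switch
        self._consecutive_failures = 0
        self.current = primary

    def report(self, network_ok: bool) -> None:
        if network_ok:
            self._consecutive_failures = 0  # one success resets the streak
            return
        self._consecutive_failures += 1
        if self.current == self.primary and self._consecutive_failures >= self.failures_to_switch:
            self.current = self.backup
            self._consecutive_failures = 0


def plan_scrape_batches(urls: List[str], exits: List[str], paused: Set[str],
                        batch_size: int = 500) -> List[Tuple[str, List[str]]]:
    """Assign whole batches (not single requests) to active exits in round-robin order."""
    active = [e for e in exits if e not in paused]
    if not active:
        raise RuntimeError("every scrape exit is paused")
    batches = [urls[i:i + batch_size] for i in range(0, len(urls), batch_size)]
    return [(active[n % len(active)], batch) for n, batch in enumerate(batches)]
```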

This layout does three things:

- Keeps high-risk actions on the quietest, most consistent exits.

- Keeps noisy tasks like scraping away from the addresses that hold account identity.

- Makes rotation explainable and observable, so you can debug flags by task and pool, not by guessing.

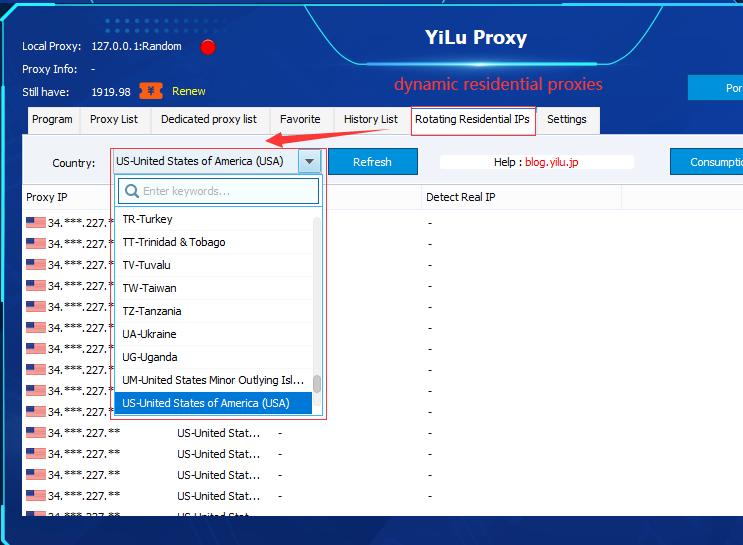

5.4. Where YiLu Proxy actually helps with this design

All of the above is much easier if your proxy provider matches the way you want to structure pools. YiLu Proxy fits this pattern well in practice: you get residential, datacenter, and even mobile exits under one roof, and you can group them by region and purpose instead of managing dozens of random lists. It’s straightforward to create “login-only” residential groups, high-volume datacenter groups for scraping, and regional backups, then reference them in your code by tag rather than hard-coding IPs. On top of that, YiLu’s dashboard gives you latency and success-rate views per node, so you can quickly pull unhealthy exits out of a task pool without touching your application logic. In other words, you design the task-based and predictable-rotation logic once, and let YiLu’s pools supply the right kind of exits underneath.

6. How to know it’s working

You don’t need complex dashboards to see if the new structure helps. Track a few simple metrics:

- Per-task verification rate

– Captchas or extra challenges per 100 logins, per pool.

– If login verifications drop while scraping continues fine, task-based isolation is doing its job.

- Exit-level error rate

– Timeouts, blocks, or hard errors per exit.

– Quick spikes on one pool should not affect the others.

- Account incident clustering

– Did “bad days” shrink from “everything is on fire” to “only scraping had issues”?

– Are account-level bans more isolated instead of happening in waves?
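The first two metrics can be computed from a simple request log. This sketch assumes a hypothetical `Event` record with pool, exit, request kind, whether a challenge was shown, and whether the request errored. Adapt the fields to whatever your own logging captures.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass
class Event:
    pool: str          # e.g. "POOL_LOGIN_HIGH"
    exit_id: str
    kind: str          # "login" or any other request type
    challenged: bool   # captcha or extra verification shown
    error: bool        # timeout, block, or hard error


def verification_per_100_logins(events: Iterable[Event]) -> Dict[str, float]:
    """Challenges per 100 logins, computed separately for each pool."""
    logins: Dict[str, int] = defaultdict(int)
    challenges: Dict[str, int] = defaultdict(int)
    for ev in events:
        if ev.kind == "login":
            logins[ev.pool] += 1
            challenges[ev.pool] += int(ev.challenged)
    return {pool: 100 * challenges[pool] / n for pool, n in logins.items()}


def error_rate_by_exit(events: Iterable[Event]) -> Dict[str, float]:
    """Share of requests per exit that ended in a timeout, block, or hard error."""
    total: Dict[str, int] = defaultdict(int)
    errors: Dict[str, int] = defaultdict(int)
    for ev in events:
        total[ev.exit_id] += 1
        errors[ev.exit_id] += int(ev.error)
    return {e: errors[e] / n for e, n in total.items()}
```

Grouping the error rates by pool, via the pool definitions, shows directly whether a spike stays inside one pool or spreads.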

If you see:

- Fewer platform flags on login and posting pools.

- Higher stability even when scraping volume increases.

- Problems localized to specific pools instead of the whole fleet.

Then your task-based pools and predictable rotation are doing what you wanted all along: giving platforms boring, stable stories for each account, while still letting you run heavy tasks at scale.